The healthcare industry is a gold mine of data, from claims to hospital management to electronic health records. In the earlier days of data analysis, we had limited capabilities to process large data sets, but the cost of data storage has decreased drastically in recent years, enabling the big data revolution. Translating this data into actionable insights requires a niche skill set, one whose possessors repeatedly throw around the words “big data” and “analytics” in conversation. Thus, we see the rise of analytics professionals guiding the course of every data-intensive endeavor.

Before a healthcare organization can leverage analytics, it faces multiple ground-level challenges in working with data.

Most external data is either available as aggregated sets from government websites or buried under layers of healthcare compliance regulations. This leaves organizations with the daunting task of scouring the internet and accumulating whatever data they can find. It’s essential to obtain this data in an abridged form before one can understand it, let alone learn and benefit from it. The sheer volume of data makes it challenging for the industry to identify relevant information and then mine it for organizational optimization. Still, as one can imagine, the larger the data sets, the more information organizations have at their disposal to make strategic decisions.

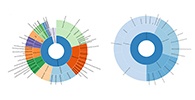

Data in healthcare organizations can originate from multiple sources, such as operational and financial data, electronic health records, and data generated by a plethora of devices that monitor patient health (also known as the Internet of Things). Organizations can combine these internal sources with data from external sources to generate a comprehensive picture of their patient demographic.

External sources include government census and healthcare public use files, made available by the Centers for Medicare & Medicaid Services (CMS), as well as private organizations, like Nielsen and Thomson Reuters, that collect data and use it like a knowledge currency. The CMS data is complicated, comes in multiple formats and requires cleanup before dissecting. If that doesn’t seem daunting for a seasoned data analyst, you might want to consider Health Insurance Portability and Accountability Act (HIPAA) compliance, which restricts the availability of CMS data to HIPAA-compliant users. Many other players provide aggregated healthcare data for a fee.

The massive scale and numerous interacting entities have created a mesh of data where picking relevant, useful information is, in itself, a large task. Once identified, healthcare data has the potential to answer many questions and bring transparency to complex processes. To conduct any meaningful analysis, analysts have to combine data from multiple sources, and since each source uses unique formats and standards, it can be quite a complicated process. Combine it with the large volume of data and you have yourself a big data challenge. Often analysts find themselves spending 60% or more of their time cleaning and organizing data (commonly known as data wrangling) before using it to generate knowledge through building analytics models or visualizations.
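To make the wrangling step concrete, here is a minimal sketch of combining two sources that disagree on formats. Everything in it is an assumption for illustration: the column names, the delimiter, the date formats and the sample rows are made up, not taken from CMS or any real provider file.

```python
import csv
import io
from datetime import datetime

# Hypothetical internal export: comma-separated, ISO dates, clean ids.
INTERNAL_CSV = """hospital_id,visit_date,patients
H001,2023-01-15,120
H002,2023-01-15,85
"""

# Hypothetical external file: semicolon-separated, US-style dates,
# different column names, ids with stray spaces and lowercase letters.
EXTERNAL_CSV = """Provider ID;Date;Population
 h001 ;01/15/2023;50000
 h002 ;01/15/2023;32000
"""

def normalize_internal(text):
    """Index internal rows by a cleaned (hospital id, date) key."""
    rows = {}
    for row in csv.DictReader(io.StringIO(text)):
        key = (row["hospital_id"].strip().upper(),
               datetime.strptime(row["visit_date"], "%Y-%m-%d").date())
        rows[key] = {"patients": int(row["patients"])}
    return rows

def normalize_external(text):
    """Index external rows by the same kind of key as the internal data."""
    rows = {}
    for row in csv.DictReader(io.StringIO(text), delimiter=";"):
        key = (row["Provider ID"].strip().upper(),
               datetime.strptime(row["Date"].strip(), "%m/%d/%Y").date())
        rows[key] = {"population": int(row["Population"])}
    return rows

def combine(internal, external):
    """Keep only the keys present in both sources and merge their fields."""
    return {key: {**internal[key], **external[key]}
            for key in internal.keys() & external.keys()}

combined = combine(normalize_internal(INTERNAL_CSV),
                   normalize_external(EXTERNAL_CSV))
```

Most of the code is spent agreeing on a shared key (identifier casing, whitespace, date format) before any merging happens, which is typical of why wrangling takes so much of an analyst's time.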

So where do we start? We have to frame a specific problem statement and set quantifiable goals. This seemingly inconsequential task often holds the key to a successful project. Typically, when someone hears “big data” or “analytics,” they think of it as a remedy that will fix everything. However, just like any information system, it follows a cute little rule the tech community calls GIGO (garbage in, garbage out)! If you don’t know what it is that you hope to achieve from a big data endeavor, the system has no obligation to be right.

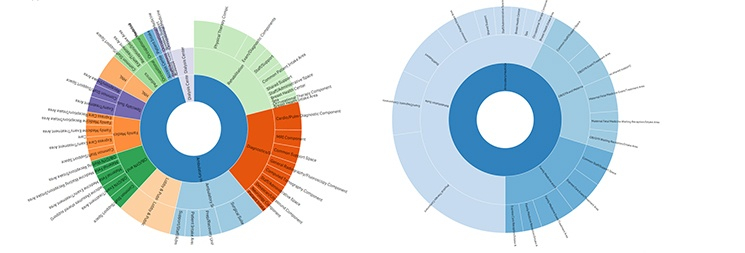

Focused data sets can tell interesting stories about an organization’s past and guide its future direction. Hospitals can make better-informed decisions using data. Information stored across long periods of time (a historic data warehouse) can help generate descriptive analytics, which analysts can use to understand a client’s current state. Based on this information, we can aid administrators in correcting their organization’s course of action and formulating new policies. Data-informed companies are moving toward preemptive action, or predictive analytics. A more evolved approach, operational analytics, integrates analytics into the regular workflow, where an automated decision-making process applies specific rules, much like an email spam filter.
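The spam-filter comparison can be sketched as a small rule engine. This is only an illustration: the `Admission` fields, the rule labels and the thresholds below are hypothetical and are not clinical guidance or any vendor's actual rule set.

```python
from dataclasses import dataclass

@dataclass
class Admission:
    patient_id: str
    age: int
    prior_admissions_12m: int        # admissions in the previous 12 months
    discharged_with_followup: bool   # was a follow-up visit scheduled?

# Each rule is a (label, predicate) pair, much like spam-filter rules.
# Thresholds are made up for illustration.
RULES = [
    ("frequent prior admissions", lambda a: a.prior_admissions_12m >= 3),
    ("no follow-up scheduled", lambda a: not a.discharged_with_followup),
    ("elderly patient", lambda a: a.age >= 80),
]

def triggered_rules(admission):
    """Return the labels of every rule this admission triggers."""
    return [label for label, predicate in RULES if predicate(admission)]

def needs_review(admission, min_rules=2):
    """Flag the admission when at least `min_rules` rules fire."""
    return len(triggered_rules(admission)) >= min_rules
```

Because the decision is just a list of named rules, the flag can be produced automatically inside the regular workflow, and staff can see which rules fired instead of receiving an unexplained score.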

Data-based change is easier to implement, for instance by using the information hospitals already store about their daily operations. Seemingly trivial information, like patient re-admission data, can help identify the various factors that correlate with re-admission. Using this information, systems can counter providers’ negligence and educate patients about the benefits of following prescribed preventive measures.
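As a rough sketch of how re-admission records might be examined for correlating factors, the snippet below compares re-admission rates across the values of a single factor. The records and the `followup_visit` field are invented toy data, and a difference in rates only suggests a correlation worth investigating, not a cause.

```python
from collections import defaultdict

# Made-up toy records; a real analysis would read these from hospital systems.
RECORDS = [
    {"followup_visit": True, "readmitted": False},
    {"followup_visit": True, "readmitted": False},
    {"followup_visit": True, "readmitted": True},
    {"followup_visit": True, "readmitted": False},
    {"followup_visit": False, "readmitted": True},
    {"followup_visit": False, "readmitted": True},
    {"followup_visit": False, "readmitted": False},
    {"followup_visit": False, "readmitted": True},
]

def readmission_rate_by(records, factor):
    """Return the share of re-admitted patients for each value of `factor`."""
    totals = defaultdict(int)
    readmits = defaultdict(int)
    for record in records:
        totals[record[factor]] += 1
        readmits[record[factor]] += int(record["readmitted"])
    return {value: readmits[value] / totals[value] for value in totals}

rates = readmission_rate_by(RECORDS, "followup_visit")
# With the toy data: 0.25 for patients with a follow-up visit, 0.75 without.
```

Running the same function over other fields (discharge day, diagnosis group and so on) gives a quick first pass at which factors deserve a closer statistical look.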

Once analysts complete all this, the collection of bits is ready to provide distilled operational insights, answering questions like “How do we compare to other hospitals?” or “Which hospitals should we compare ourselves to?” Similarly, organizations may ask, “What is a reasonable patient volume that we can expect over the year?” or “What will be our market share, and how can we improve it?” Combining internal data with insights from external sources can give organizations a different perspective. This smart data can help filter out the noise, allowing clients to focus only on what is essential and actionable, which can help foster behavioral changes across the organization.